sauron-cv

The all-seeing computer vision client/server architecture.

Fast, centralized computer vision (CV) and machine learning (ML) processing for distributed autonomous compute / robotics workflows.

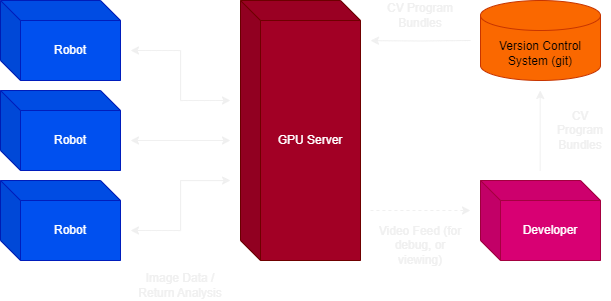

Architecture

The Sauron architecture spans multiple devices. Clients with limited hardware capabilities (e.g. autonomous robots or embedded hardware platforms) stream image data to a central server with more substantial video processing power (such as multiple GPUs). The server runs custom computer vision scripts and sends the analysis data back to the clients.

Developers can write these scripts and upload them directly to the server via a secure file transfer method (like rsync or SCP), a version control system (such as git), or through the optional administrative web interface.

Computer Vision Server

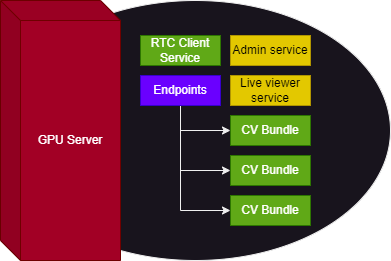

The server runs a real-time communications (RTC) service (likely over HTTP/3 and QUIC) that handles communications from clients. Depending on which endpoint is triggered, it passes the input to the appropriate CV script and sends the return value back to the client.
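The endpoint-to-script routing described above can be sketched as a small registry. This is only an illustration under stated assumptions: the `Dispatcher` class, the `/mean-brightness` endpoint, and the bytes-in/bytes-out handler signature are hypothetical and are not taken from the Sauron codebase, and the transport layer is left out entirely.

```python
from typing import Callable, Dict

# Hypothetical handler signature: raw image bytes in, serialized result out.
Handler = Callable[[bytes], bytes]


class Dispatcher:
    """Maps endpoint names to CV handlers (illustrative, not Sauron's actual API)."""

    def __init__(self) -> None:
        self._handlers: Dict[str, Handler] = {}

    def register(self, endpoint: str) -> Callable[[Handler], Handler]:
        """Decorator that binds a CV handler to an endpoint name."""
        def decorator(fn: Handler) -> Handler:
            self._handlers[endpoint] = fn
            return fn
        return decorator

    def dispatch(self, endpoint: str, payload: bytes) -> bytes:
        """Run the handler registered for `endpoint` and return its result."""
        handler = self._handlers.get(endpoint)
        if handler is None:
            raise KeyError(f"no CV script registered for endpoint {endpoint!r}")
        return handler(payload)


dispatcher = Dispatcher()


@dispatcher.register("/mean-brightness")
def mean_brightness(frame: bytes) -> bytes:
    # Stand-in for a real CV script: treat the payload as 8-bit grayscale pixels.
    value = sum(frame) // len(frame) if frame else 0
    return str(value).encode("ascii")
```

In a real deployment the handler would presumably call into OpenCV or an ML model; the point here is only that each endpoint resolves to exactly one script, and that an unknown endpoint fails loudly instead of being silently ignored.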

Two optional web interfaces are available for installation: one for viewing live camera feeds (and their CV processing), and another for managing CV scripts. Either can be omitted for performance and/or security.

Embedded Clients

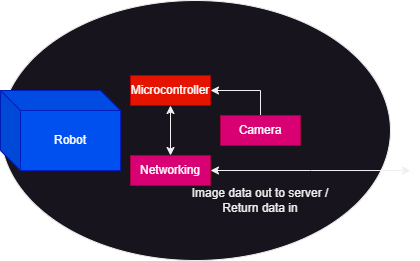

We will demonstrate a client setup on a robot with a camera: Sauron processes the camera feed and returns data/commands to a microcontroller.

In this first example, the robot has a camera integrated into its design, and the main controller reads the video feed. This data is transmitted via a low-latency network protocol to the server, which processes the camera feed using a CV bundle. Response data is streamed back over the same feed, and the microcontroller interprets it accordingly.
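Because frames and responses travel as a stream, each message needs a boundary the receiver can detect. The sketch below shows one common approach, a length-prefixed header. The header layout (a 32-bit frame id followed by a 32-bit payload length, both big-endian) is an assumption made for illustration; the source does not specify Sauron's wire format.

```python
import struct
from typing import Optional, Tuple

# Hypothetical header: frame id and payload length, both unsigned 32-bit, network byte order.
HEADER = struct.Struct("!II")


def encode_message(frame_id: int, payload: bytes) -> bytes:
    """Prefix a payload (e.g. one compressed camera frame) with its header."""
    return HEADER.pack(frame_id, len(payload)) + payload


def decode_message(buffer: bytes) -> Optional[Tuple[int, bytes, bytes]]:
    """Try to pull one message off the front of `buffer`.

    Returns (frame_id, payload, remaining_bytes), or None if the buffer
    does not yet hold a complete message.
    """
    if len(buffer) < HEADER.size:
        return None
    frame_id, length = HEADER.unpack_from(buffer)
    end = HEADER.size + length
    if len(buffer) < end:
        return None
    return frame_id, buffer[HEADER.size:end], buffer[end:]
```

Carrying a frame id in the header would let a client match each response to the frame that produced it, which matters if responses arrive late or frames are dropped.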

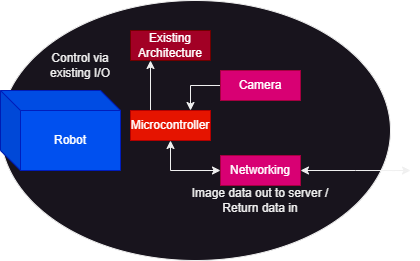

In this second example, the robot did not originally have a camera installed, but has been set up with an additional microcontroller and networking hardware. This new microcontroller sends the image data to the server, receives the response data stream, interprets it, and then controls the existing architecture through its existing I/O.
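The "interpret, then control" step could look roughly like the following. Everything specific here is hypothetical: the JSON response with a `target_x` field (a normalized horizontal offset from -1 to 1), the differential-drive mapping, and the 0 to 255 duty-cycle range are assumptions chosen for illustration. On an Arduino-class board the same logic would be written in that platform's language.

```python
import json
from typing import Tuple


def clamp(value: int, low: int, high: int) -> int:
    """Limit `value` to the inclusive range [low, high]."""
    return max(low, min(high, value))


def steering_from_response(raw: bytes, base_speed: int = 128, gain: int = 100) -> Tuple[int, int]:
    """Turn a server response into (left, right) motor duty cycles.

    Assumes a hypothetical response such as b'{"target_x": 0.5}', where
    target_x is the target's horizontal offset from the image center.
    """
    data = json.loads(raw.decode("utf-8"))
    # Guard against out-of-range values before they reach the motors.
    offset = max(-1.0, min(1.0, float(data["target_x"])))
    correction = round(gain * offset)
    left = clamp(base_speed + correction, 0, 255)
    right = clamp(base_speed - correction, 0, 255)
    return left, right
```

A target to the right of center (positive offset) speeds up the left motor and slows the right one, turning the robot toward the target; the returned values would then be written to whatever I/O already drives the motors.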

By relying on more powerful centralized hardware, one can therefore add computer vision capabilities to multiple robots with as little as an Arduino, an SPI camera, and a Wi-Fi module for roughly $50. This is considerably less than buying hardware with integrated GPU capabilities, such as the NVIDIA Jetson Nano (but you could buy one or two of those to run your server on!).

Additionally, the smaller microcontrollers can fit in more compact form factors, ideal for other embedded hardware.

Subprojects

This repository is divided into subprojects for each part of the Sauron stack:

- sauron-server: Runs on the server side, ideally on GPU-equipped hardware. Handles real-time communication with clients and directs video streams to CV scripts.

- sauron-client: Pre-written scripts/libraries for various microcontrollers that interpret camera data and transmit it to Sauron.

- sauron-api: Developer API for writing CV scripts for use on the Sauron server. Developed to support either C++ or Python.

- sauron-admin: Optional administrator console web service. Allows for managing CV scripts, authorized connections, etc.

- sauron-liveview: Optional live view web service. Allows for viewing connected video streams and results of running CV scripts.

More Info

See our roadmap for more details.

License

This project is licensed under the GNU General Public License, Version 3. The license is available for review in this repository, or directly from GNU.

Third-Party Open Source Libraries

The following third-party open source libraries are used in this product under their respective licenses:

- OpenCV: Apache License v2 (prior to 4.5.0: 3-Clause BSD License)